Elon Musk’s company xAI has launched Grok 4, which independent benchmarking by Artificial Analysis now ranks as the leading AI model.

xAI marked the launch with a livestream demonstrating the model’s capabilities, including coding assistance and advanced problem-solving.

Elon Musk stated in an interview: “Grok 4 is the smartest AI in the world. It will get perfect results on the SAT and near perfect results on any GED test. All questions it has never seen before. Grok 4 is smarter than almost all graduate students in all disciplines simultaneously.”

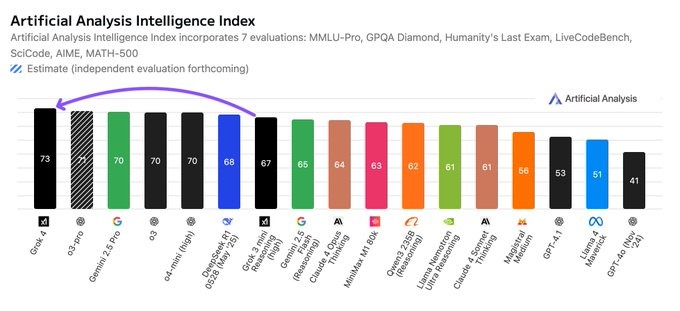

Tech enthusiasts are excited about its implications for technological and scientific advancement. Supporting Musk’s assessment, @ArtificialAnlys, which provides independent analysis of AI models and hosting providers, wrote: “xAI gave us early access to Grok 4 – and the results are in. Grok 4 is now the leading AI model.

“We have run our full suite of benchmarks and Grok 4 achieves an Artificial Analysis Intelligence Index of 73, ahead of OpenAI o3 at 70, Google Gemini 2.5 Pro at 70, Anthropic Claude 4 Opus at 64 and DeepSeek R1 0528 at 68. Full results breakdown below.

“This is the first time that @elonmusk’s @xai has led the AI frontier. Grok 3 scored competitively with the latest models from OpenAI, Anthropic and Google – but Grok 4 is the first time that our Intelligence Index has shown xAI in first place.

“We tested Grok 4 via the xAI API. The version of Grok 4 deployed for use on X/Twitter may be different to the model available via API. Consumer application versions of LLMs typically have instructions and logic around the models that can change style and behavior.

“Grok 4 is a reasoning model, meaning it ‘thinks’ before answering. The xAI API does not share reasoning tokens generated by the model.

“Grok 4’s pricing is equivalent to Grok 3 at $3/$15 per 1M input/output tokens ($0.75 per 1M cached input tokens). The per-token pricing is identical to Claude 4 Sonnet, but more expensive than Gemini 2.5 Pro ($1.25/$10, for <200K input tokens) and o3 ($2/$8, after recent price decrease). We expect Grok 4 to be available via the xAI API, via the Grok chatbot on X, and potentially via Microsoft Azure AI Foundry (Grok 3 and Grok 3 mini are currently available on Azure).

Key benchmarking results:

➤ Grok 4 leads in not only our Artificial Analysis Intelligence Index but also our Coding Index (LiveCodeBench & SciCode) and Math Index (AIME24 & MATH-500)

➤ All-time high score in GPQA Diamond of 88%, representing a leap from Gemini 2.5 Pro’s previous record of 84%

➤ All-time high score in Humanity’s Last Exam of 24%, beating Gemini 2.5 Pro’s previous all-time high score of 21%. Note that our benchmark suite uses the original HLE dataset (Jan ’25) and runs the text-only subset with no tools

➤ Joint highest score for MMLU-Pro and AIME 2024 of 87% and 94% respectively

➤ Speed: 75 output tokens/s, slower than o3 (188 tokens/s), Gemini 2.5 Pro (142 tokens/s), Claude 4 Sonnet Thinking (85 tokens/s) but faster than Claude 4 Opus Thinking (66 tokens/s)

Other key information:

➤ 256k token context window. This is below Gemini 2.5 Pro’s context window of 1 million tokens, but ahead of Claude 4 Sonnet and Claude 4 Opus (200k tokens), o3 (200k tokens) and R1 0528 (128k tokens)

➤ Supports text and image input

➤ Supports function calling and structured outputs”
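
For readers weighing the quoted prices and speeds against each other, the arithmetic can be sketched in a few lines of Python. This is an illustrative sketch rather than an official calculator: the names `request_cost` and `generation_seconds` and the 10,000-input/2,000-output workload are hypothetical, the figures are taken directly from the numbers quoted above, and the sketch ignores cached-input pricing as well as any hidden reasoning tokens that may count toward output.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    """USD per 1M tokens, as quoted in the thread above."""
    input_per_m: float
    output_per_m: float


# Prices quoted above. The Gemini 2.5 Pro figure applies to prompts
# under 200K input tokens; o3 reflects its recent price decrease.
PRICING = {
    "Grok 4": ModelPricing(3.00, 15.00),
    "Gemini 2.5 Pro": ModelPricing(1.25, 10.00),
    "o3": ModelPricing(2.00, 8.00),
}

# Output speeds quoted above, in tokens per second.
OUTPUT_TOKENS_PER_S = {
    "Grok 4": 75,
    "Gemini 2.5 Pro": 142,
    "o3": 188,
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Estimated USD cost of one request (hypothetical helper)."""
    p = PRICING[model]
    total = input_tokens * p.input_per_m + output_tokens * p.output_per_m
    return total / 1_000_000


def generation_seconds(model: str, output_tokens: int) -> float:
    """Estimated time to stream the output at the quoted speed."""
    return output_tokens / OUTPUT_TOKENS_PER_S[model]


if __name__ == "__main__":
    # Hypothetical workload: 10,000 input tokens, 2,000 output tokens.
    for name in PRICING:
        cost = request_cost(name, 10_000, 2_000)
        secs = generation_seconds(name, 2_000)
        print(f"{name}: ${cost:.4f} per request, ~{secs:.1f}s to generate")
```

Under these assumptions, the per-request gap between models is a matter of cents, while the difference in generation time is more noticeable for long outputs.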
